05 - Stabilization

A geometric approach to the fundamental characterization of system-theoretic limitations in online learning and control

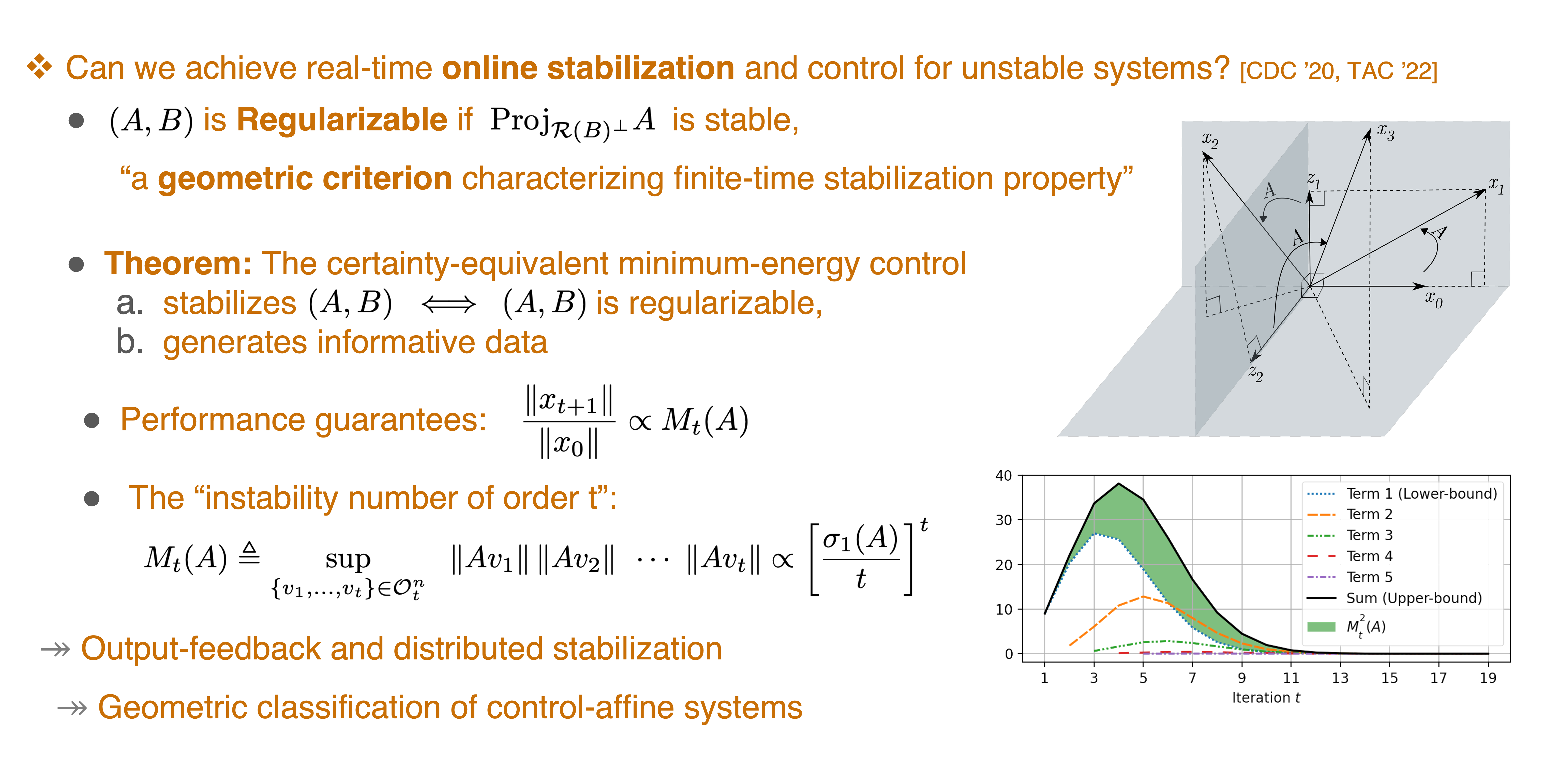

Conventional stabilization techniques typically assess control effectiveness asymptotically and often rely on assumptions such as a priori knowledge that the initial policy is stabilizing, or access to persistently exciting input-output data. However, many modern applications demand high performance in real time and depend on online data streams. This shift toward online stabilization and decision-making calls for a fundamental characterization of system-theoretic limitations in online learning and control from a finite-time perspective. I introduced the concept of regularizability for linear systems, which measures the ability to regulate a system in finite time (Talebi et al., 2020), in contrast to the traditional asymptotic notions of stabilizability and controllability. Building on this, I proposed a time-varying feedback synthesis procedure that not only regulates the system's state but also generates informative data for use in data-driven control and system identification.
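To make "informative data" concrete, the following is a minimal sketch of the standard least-squares identification that such data enable. It assumes a discrete-time model x_{t+1} = A x_t + B u_t with noise-free state measurements from a single trajectory. The matrices `A_true` and `B_true`, the random excitation input, the horizon, and the helper names `collect_trajectory` and `identify` are hypothetical choices for illustration. This is not the time-varying feedback synthesis from the papers; it only checks the rank condition under which one trajectory determines (A, B) exactly.

```python
import numpy as np

# Hypothetical unstable system x_{t+1} = A x_t + B u_t.
# A is upper triangular with eigenvalues 1.2 (unstable) and 0.5.
A_true = np.array([[1.2, 1.0],
                   [0.0, 0.5]])
B_true = np.array([[0.0],
                   [1.0]])
n, m = B_true.shape


def collect_trajectory(A, B, x0, T, rng):
    """Roll out one trajectory of length T under random (assumed) excitation.

    Returns states X with shape (n, T + 1) and inputs U with shape (m, T).
    """
    X = np.zeros((A.shape[0], T + 1))
    U = rng.standard_normal((B.shape[1], T))
    X[:, 0] = x0
    for t in range(T):
        X[:, t + 1] = A @ X[:, t] + B @ U[:, t]
    return X, U


def identify(X, U):
    """Least-squares estimate of (A, B) from a single trajectory.

    The data are informative for identification when Z = [X0; U0] has full
    row rank n + m; with noise-free data, [A B] = X1 @ pinv(Z) is then exact.
    """
    nx = X.shape[0]
    X0, X1 = X[:, :-1], X[:, 1:]
    Z = np.vstack([X0, U])
    if np.linalg.matrix_rank(Z) < Z.shape[0]:
        raise ValueError("data not informative: [X0; U0] is rank deficient")
    AB = X1 @ np.linalg.pinv(Z)
    return AB[:, :nx], AB[:, nx:]


# Full row rank needs at least n + m samples; use two extra for margin.
rng = np.random.default_rng(0)
X, U = collect_trajectory(A_true, B_true, x0=np.array([1.0, -1.0]),
                          T=n + m + 2, rng=rng)
A_hat, B_hat = identify(X, U)
print(np.allclose(A_hat, A_true), np.allclose(B_hat, B_true))
```

Here the open-loop random input lets the unstable mode grow while data are being collected; keeping the state regulated while still producing full-rank data is the kind of tension the finite-time viewpoint above is meant to address.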

This approach has opened up a number of intriguing design problems involving streaming data. For example, it characterizes the properties required to regulate an unstable system on the fly (Talebi et al., 2022), such as an aircraft whose previous control policy no longer stabilizes it due to damage or misidentification. In particular, it reveals a fundamental hardness inherent in any online data-driven stabilization problem.

Key references:

- Talebi, S., Alemzadeh, S., Rahimi, N., & Mesbahi, M. (2020). Online Regulation of Unstable Linear Systems from a Single Trajectory. IEEE Conference on Decision and Control (CDC), 4784–4789.
- Talebi, S., Alemzadeh, S., Rahimi, N., & Mesbahi, M. (2022). On Regularizability and its Application to Online Control of Unstable LTI Systems. IEEE Transactions on Automatic Control (TAC), 67(12), 6413–6428.