Learning and Geometry

Constrained policy optimization on submanifolds of stabilizing controllers

In this project, we study optimization over submanifolds of stabilizing controllers, equipped with a Riemannian metric arising from constrained Linear Quadratic Regulator (LQR) problems.

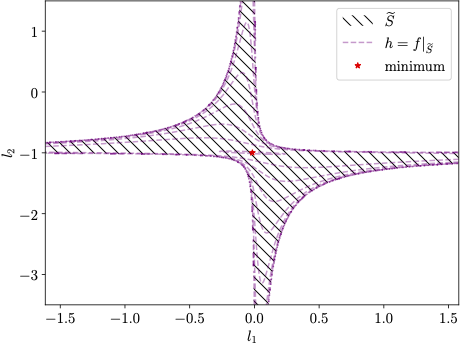

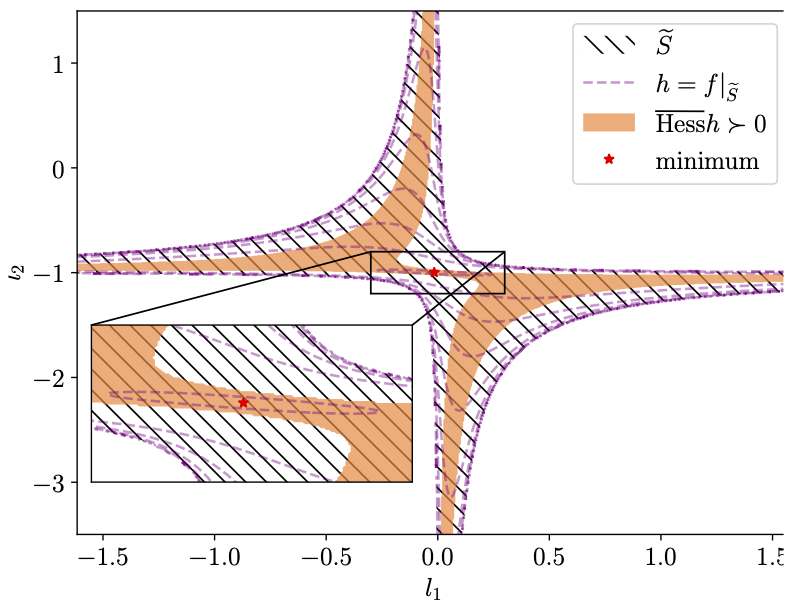

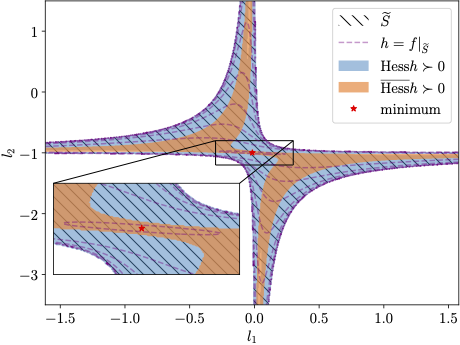

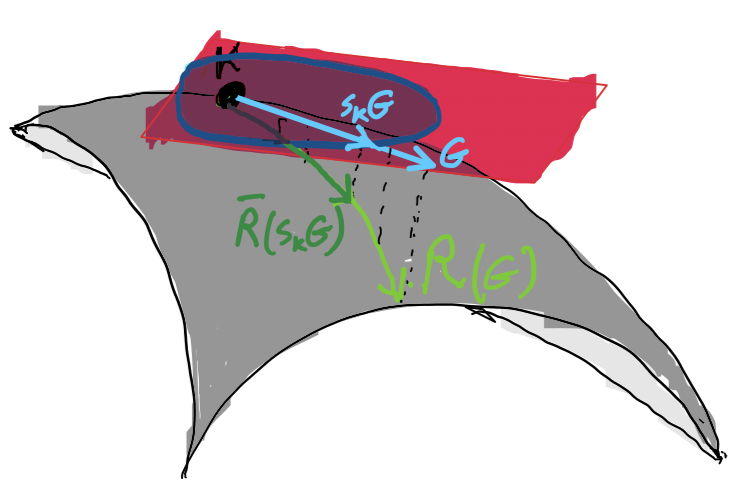
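To make these objects concrete, here is a minimal NumPy sketch. It assumes a continuous-time system ẋ = Ax + Bu with state feedback u = -Kx, cost weights Q and R, and initial-state covariance Σ, and evaluates the LQR cost J(K) = tr(P_K Σ) on stabilizing gains. Purely as an illustrative assumption, it also evaluates a covariance-weighted inner product g_K(V, W) = tr(Vᵀ W Y_K), where Y_K is the closed-loop state covariance. The metric used in the project may differ. The helper names (`lyap`, `lqr_cost`, `metric`) are hypothetical.

```python
import numpy as np


def lyap(F, W):
    """Solve F X + X F^T + W = 0 via the vectorized (Kronecker) form; fine for small n."""
    n = F.shape[0]
    eye = np.eye(n)
    # Column-major vec: vec(F X) = (I kron F) vec(X), vec(X F^T) = (F kron I) vec(X).
    L = np.kron(eye, F) + np.kron(F, eye)
    x = np.linalg.solve(L, -W.flatten(order="F"))
    return x.reshape((n, n), order="F")


def is_stabilizing(A, B, K):
    """K is stabilizing if A - B K is Hurwitz (all eigenvalues in the open left half-plane)."""
    return bool(np.all(np.linalg.eigvals(A - B @ K).real < 0))


def lqr_cost(A, B, K, Q, R, Sigma):
    """J(K) = tr(P_K Sigma) for u = -K x; +inf outside the set of stabilizing gains."""
    if not is_stabilizing(A, B, K):
        return np.inf
    Acl = A - B @ K
    P = lyap(Acl.T, Q + K.T @ R @ K)  # Acl^T P + P Acl + Q + K^T R K = 0
    return float(np.trace(P @ Sigma))


def metric(A, B, K, Sigma, V, W):
    """Illustrative inner product g_K(V, W) = tr(V^T W Y_K); assumes K is stabilizing."""
    Y = lyap(A - B @ K, Sigma)  # Acl Y + Y Acl^T + Sigma = 0 (closed-loop covariance)
    return float(np.trace(V.T @ W @ Y))


# Scalar example: a = b = q = r = sigma = 1 and k = 2, so the closed loop is -1.
one = np.array([[1.0]])
print(lqr_cost(one, one, 2 * one, one, one, one))  # 2.5
print(metric(one, one, 2 * one, one, one, one))    # 0.5
```

Returning +inf outside the stabilizing set reflects that J is only finite on stabilizing gains, which is why the feasible region is an open set rather than all of the gain space.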

Let us illustrate this non-Euclidean geometry with the following example, which arises from the structure of the LQR problem:

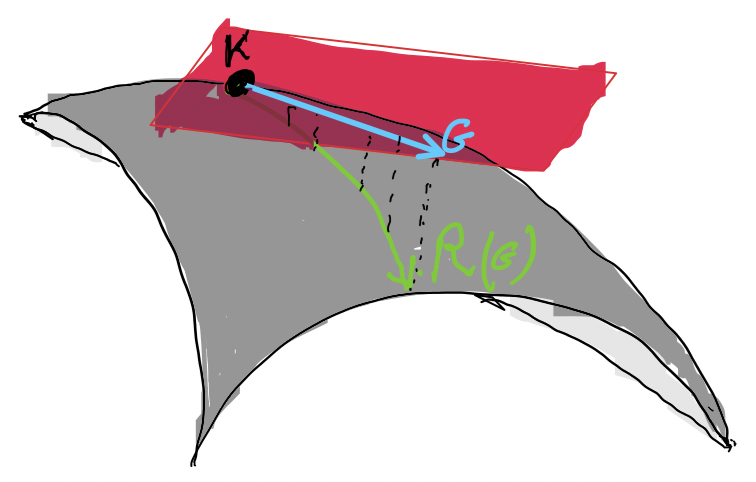
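A standard way to see why straight-line (Euclidean) reasoning is inadequate here is that the set of stabilizing gains is not convex. This may or may not be the example shown in the figure. The sketch below assumes a hypothetical system with A = 0 and B = I, so K is stabilizing exactly when -K is Hurwitz. Two stabilizing gains K1 and K2 then have a midpoint that is not stabilizing.

```python
import numpy as np


def is_stabilizing(A, B, K):
    """K is stabilizing if every eigenvalue of A - B K has negative real part."""
    return bool(np.all(np.linalg.eigvals(A - B @ K).real < 0))


# Hypothetical system: A = 0, B = I, so A - B K = -K.
A = np.zeros((2, 2))
B = np.eye(2)

K1 = np.array([[1.0, 4.0], [0.0, 1.0]])  # eigenvalues of -K1: -1, -1
K2 = np.array([[1.0, 0.0], [4.0, 1.0]])  # eigenvalues of -K2: -1, -1
K_mid = 0.5 * (K1 + K2)                  # [[1, 2], [2, 1]]; eigenvalues of -K_mid: -3, 1

print(is_stabilizing(A, B, K1), is_stabilizing(A, B, K2), is_stabilizing(A, B, K_mid))
# True True False
```

A line segment between two stabilizing gains can therefore cross the stability boundary, so updates on this set have to be checked against some stability certificate.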

We provide a Newton-type algorithm that enjoys local convergence guarantees and exploits the inherent geometry of the problem. Instead of relying on the exponential map, and in the absence of a global retraction, the algorithm builds on the developed stability certificate and on the linearity of the constraints. This idea can be illustrated in the following abstract form:

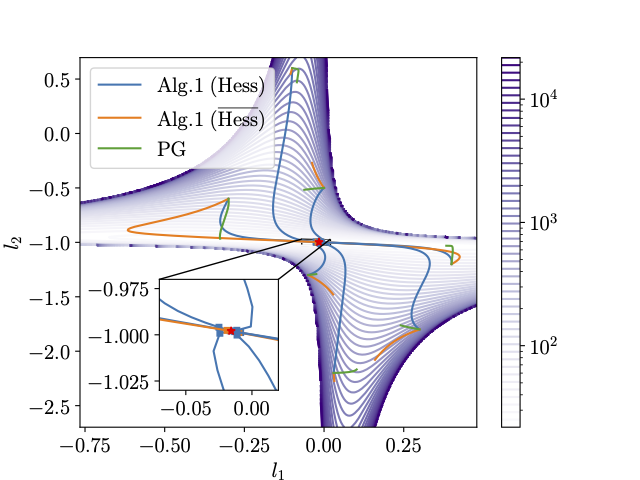

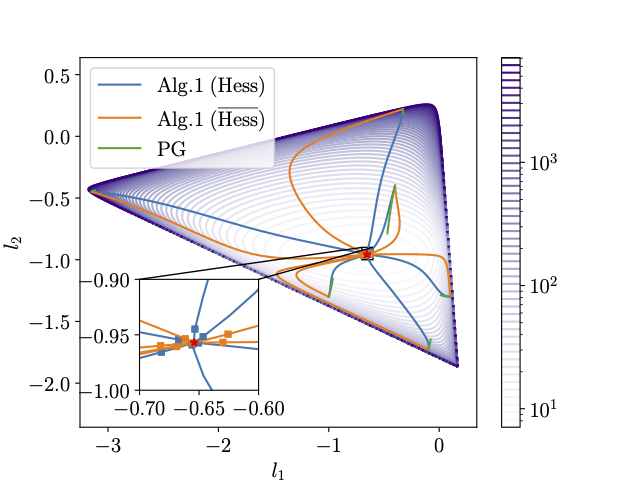

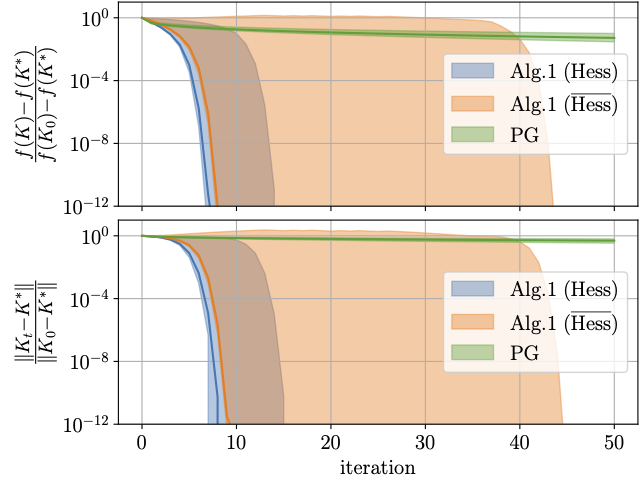
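The interplay between linear constraints and stability can be sketched as follows. This is a deliberately simplified first-order stand-in, not the Newton-type method described above. It takes a gradient step projected onto a structural subspace (a hypothetical sparsity `mask`) and halves the step until the new gain is still stabilizing and the cost decreases. A plain eigenvalue check stands in for the stability certificate. It assumes u = -Kx, for which the Euclidean gradient of J(K) = tr(P_K Σ) is 2(RK - BᵀP_K)Y_K. All function names and the example system are hypothetical.

```python
import numpy as np


def lyap(F, W):
    """Solve F X + X F^T + W = 0 via the Kronecker form (small problems only)."""
    n = F.shape[0]
    eye = np.eye(n)
    L = np.kron(eye, F) + np.kron(F, eye)
    return np.linalg.solve(L, -W.flatten(order="F")).reshape((n, n), order="F")


def is_stabilizing(A, B, K):
    """True if A - B K is Hurwitz."""
    return bool(np.all(np.linalg.eigvals(A - B @ K).real < 0))


def cost_and_grad(A, B, K, Q, R, Sigma):
    """LQR cost J(K) = tr(P Sigma) and its Euclidean gradient 2 (R K - B^T P) Y."""
    Acl = A - B @ K
    P = lyap(Acl.T, Q + K.T @ R @ K)
    Y = lyap(Acl, Sigma)
    return float(np.trace(P @ Sigma)), 2.0 * (R @ K - B.T @ P) @ Y


def guarded_step(A, B, K, Q, R, Sigma, mask, step=1.0, max_halvings=30):
    """One projected-gradient step on {K : K = mask * K}, halved until stable and decreasing."""
    J, G = cost_and_grad(A, B, K, Q, R, Sigma)
    D = -mask * G  # orthogonal projection of -grad onto the linear (sparsity) subspace
    for _ in range(max_halvings):
        K_new = K + step * D
        # Eigenvalue check used here as a stand-in for the stability certificate.
        if is_stabilizing(A, B, K_new):
            J_new, _ = cost_and_grad(A, B, K_new, Q, R, Sigma)
            if J_new < J:
                return K_new, J_new
        step *= 0.5
    return K, J  # no acceptable step found; keep the current gain


# Hypothetical 2-state example with a diagonal (decentralized) structure constraint.
A = np.array([[1.0, 1.0], [0.0, 2.0]])
B = np.eye(2)
Q, R, Sigma = np.eye(2), np.eye(2), np.eye(2)
mask = np.eye(2)                 # only diagonal gains are allowed
K = np.diag([4.0, 5.0])          # structured and stabilizing starting gain
costs = []
for _ in range(20):
    K, J = guarded_step(A, B, K, Q, R, Sigma, mask)
    costs.append(J)
print(np.round(K, 3), costs[-1])
```

Because the constraint is linear, the projected direction never leaves the structured subspace, so only stability needs to be guarded along the step. A second-order method would replace the projected gradient with a (Riemannian) Newton direction in the same subspace.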

Let us now illustrate the proposed algorithm through numerical simulations of structured and output-feedback LQR problems.

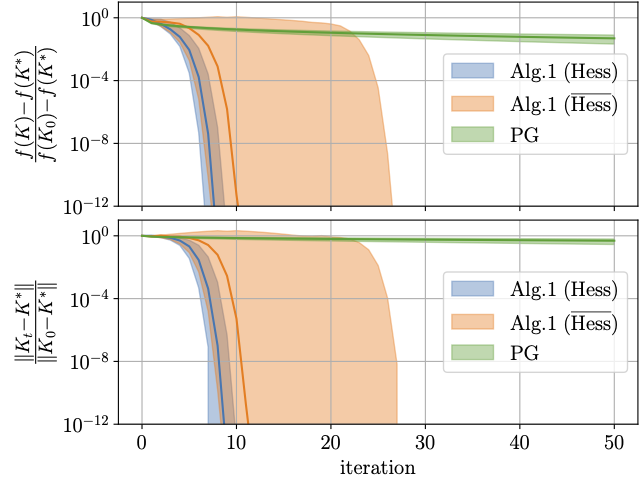
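Both problem classes impose linear constraints on the gain. A structured constraint fixes a sparsity pattern (a hypothetical `mask`). Output feedback u = -Fy with y = Cx restricts the state-feedback gain to K = FC. Each constraint set is a linear subspace, and, assuming the constraint submanifold is its intersection with the open set of stabilizing gains, the tangent space at every point is that subspace. The sketch below, with hypothetical helper names and data, implements the orthogonal (Frobenius) projections onto the two subspaces: an entrywise mask, and right-multiplication by pinv(C) C.

```python
import numpy as np


def project_structured(G, mask):
    """Orthogonal projection onto {K : K_ij = 0 wherever mask_ij = 0}."""
    return mask * G


def project_output_feedback(G, C):
    """Orthogonal projection onto {F C : F arbitrary}, i.e. gains of the form u = -F C x."""
    # pinv(C) @ C is the orthogonal projector onto the row space of C.
    return G @ np.linalg.pinv(C) @ C


rng = np.random.default_rng(0)
G = rng.standard_normal((2, 3))                   # an arbitrary direction in R^{m x n}
mask = np.array([[1.0, 0.0, 1.0], [0.0, 1.0, 0.0]])
C = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]])  # hypothetical 2-output measurement

Ps = project_structured(G, mask)
Po = project_output_feedback(G, C)
# Projections are idempotent, and the residual is orthogonal to the subspace.
print(np.allclose(project_structured(Ps, mask), Ps))    # True
print(np.allclose(project_output_feedback(Po, C), Po))  # True
print(np.isclose(np.sum((G - Po) * Po), 0.0))           # True
```

Either projection can be paired with a stability check, as in a guarded step, to keep iterates both feasible and stabilizing.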

To better see the advantage of exploiting the inherent non-Euclidean geometry, we examine the performance of this algorithm on 100 randomly sampled parameter sets for these problems:

For more details and convergence guarantees, see this paper.